"Creating Organic AI" Light Processors and Organic

The true revolution of Organic Artificial Intelligences

9/3/2025 · 5 min read

We have been pursuing this research for several years, since before we became the company we are today, when it was just the CEO and his initial research team formulating ideas and researching voiceovers. One line of study covered the light processor and the organic processor, which is built from artificial organic cells similar to neurons. We have also researched cell development in the laboratory.

General Scope of the Vision

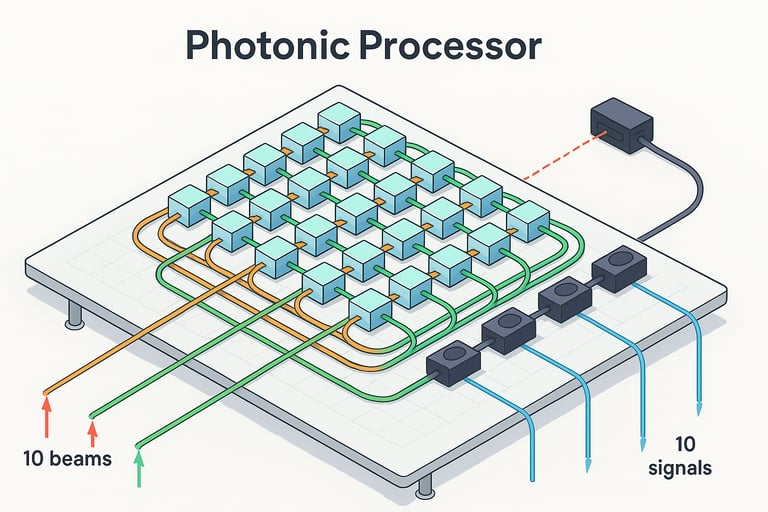

Photon Processor

Our thesis was that light (photon) processors could be the basis for computers and systems powered entirely by light, with higher speeds, lower noise, lower energy consumption, and greater overall data-processing capacity. Building a viable prototype was only part of the challenge: we had already built a very large one around a simple concept, just to demonstrate that it works. Creating something different and functional would require a new architecture and a new programming language. Even with existing languages and architectures, the technological leap is still substantial.

Organic Processor = Organic Artificial Intelligence

Existing artificial intelligences are basically search and text engines that use probability and statistics to retrieve pre-existing information. The limiting factor for a "thinking" AI, one that can reason and literally think, would be synthetic organic material. There are already many studies on the use of living tissue, such as laboratory-grown neurons, for information processing. The most ethical approach is to ensure that the organic tissue is created artificially and not taken from animals. Animal tissue might perhaps be used in the first data processing experiments, but not for "AI." An organic AI will provide logical responses and thought, but it will not be as fast as traditional or light-based AI, because its use is completely different.

Real application

Real-life application of the Light Processor

In the short term, the real application of light processors is limited to using them as processors within traditional architectures to increase processing capacity.

In the architecture I designed as a proof of concept, a plate carries several cubes that reflect and transmit light, as if they were crystals. Sensors below and on the sides of the plate detect the light output. The light is emitted by 10 laser beams, and the "wires" can be optical fibers or simply the laser paths reflected or refracted by the cubes. Each cube could transmit 3 or 4 pieces of information at a time, but using the color spectrum scales this to 4¹¹ options per cube. The light passing through each cube then behaves as if it carried 4 zeros and ones, or 4¹¹ data transmission and storage options, so a far larger volume of information can be calculated in a much shorter time, within traditional architectures and without being a quantum processor.

“4¹¹” ($4^{11} = 2^{22} \approx 4.19$ million states, i.e. 22 bits) is a great theoretical upper bound, but the practical limit comes from SNR, crosstalk, and non-linearities.
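The arithmetic behind that figure can be checked in a few lines. This is a minimal sketch; reading 4¹¹ as 11 independent color channels with 4 distinguishable levels each is an assumption made here for illustration, and the function name is hypothetical.

```python
import math

def bits_for_states(levels: int, channels: int) -> float:
    """Bits needed to label every combination of `channels` channels
    with `levels` distinguishable levels each (ideal, noise-free case)."""
    states = levels ** channels
    return math.log2(states)

states = 4 ** 11
bits = bits_for_states(levels=4, channels=11)
print(states, bits)  # 4194304 22.0
```

In practice the usable number of levels per channel shrinks with SNR and crosstalk, so the real figure sits below this bound.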

What already exists today and tests we have done to validate our model

Reference Architecture

Optical I/O

External lasers (C-band) with scalable power budget, coupled via low-loss grating couplers (<1 dB target). Nature

10 input beams (as proposed in the proof-of-concept architecture), each multiplexed into 16–32 λ.

Computational Core

MZI mesh (Clements topology) sized for N×N (e.g., 64×64) with electro-optic phase shifters (TFLN or Pockels in Si: >100 GHz bandwidth, low Vπ). light-am.com, Wiley Online Library

Operation Modes:

Analog ONN: intensities encode values; MACs performed “at the speed of light”.

Systolic/OTT (diffraction/free-space): alternative inspired by companies using free-space optics/diffraction. Lightelligence
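To make the MZI building block concrete, here is a minimal sketch of a single ideal 2×2 Mach-Zehnder interferometer: two 50:50 couplers with an internal phase θ and an external phase φ. The convention used (where the phase shifters sit, the coupler matrix) is one common textbook choice and is an assumption here, not necessarily the layout of the planned chip; losses and imperfect couplers are ignored.

```python
import cmath
import math

def matmul2(a, b):
    """Multiply two 2x2 matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]

def mzi(theta: float, phi: float):
    """Transfer matrix of an ideal, lossless MZI (assumed convention):
    external phase phi, coupler, internal phase theta, coupler."""
    s = 1 / math.sqrt(2)
    coupler = [[s, 1j * s], [1j * s, s]]            # ideal 50:50 coupler
    inner = [[cmath.exp(1j * theta), 0], [0, 1]]     # phase on the upper arm
    outer = [[cmath.exp(1j * phi), 0], [0, 1]]       # phase on the upper input
    return matmul2(coupler, matmul2(inner, matmul2(coupler, outer)))

def output_powers(t, amplitudes):
    """Optical power at each output port for the given input amplitudes."""
    out = [t[i][0] * amplitudes[0] + t[i][1] * amplitudes[1] for i in range(2)]
    return [abs(x) ** 2 for x in out]

# Light entering the upper port only, theta = pi/2: an even split.
powers = output_powers(mzi(math.pi / 2, 0.0), [1, 0])
print([round(p, 3) for p in powers])  # [0.5, 0.5]
```

With this convention the upper output carries sin²(θ/2) of the input power and the lower output cos²(θ/2), so θ sets the split ratio and the total power is conserved. An N×N mesh chains many such blocks.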

Readout and Digitization

Ge/Si photodetectors per λ (or summed across channels, depending on layer), TIA + ADC.

Closed-loop self-calibration (test-tone injection, phase estimation, thermal correction). Physical Review, arXiv
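A closed loop of this kind can be sketched as a phase tuner that only sees photodetector readings. The example below is a toy model under stated assumptions: one ideal MZI port whose power follows sin²((θ + drift)/2), an unknown thermal drift, and a finite-difference gradient step. All names and parameter values are hypothetical.

```python
import math

def measured_power(theta: float, drift: float) -> float:
    """Simulated photodetector tap: ideal MZI port power with an
    unknown phase drift added to the programmed phase."""
    return math.sin((theta + drift) / 2) ** 2

def calibrate(target: float, drift: float, theta: float = 0.3,
              step: float = 1.0, delta: float = 1e-3,
              iterations: int = 200) -> float:
    """Tune theta by gradient descent using power measurements only."""
    def loss(t: float) -> float:
        return (measured_power(t, drift) - target) ** 2

    for _ in range(iterations):
        # Central finite difference: two extra measurements per update.
        grad = (loss(theta + delta) - loss(theta - delta)) / (2 * delta)
        theta -= step * grad
    return theta

theta = calibrate(target=0.5, drift=0.4)
print(abs(measured_power(theta, 0.4) - 0.5) < 1e-6)  # True
```

The controller never learns the drift itself; it just keeps moving θ until the measured power matches the target, which is why the same loop can run continuously as temperature changes.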

Electronic Control

MCU/FPGA configures phases/attenuations; performs training or quantization off-photonic; executes nonlinear functions (if needed) and coordinates stochastic rounding and compensation.

Interconnection

Roadmap for co-packaged optics (CPO) when moving to clusters (1.6 Tb/s per port and beyond).

Algorithms and “Programming Language”

Capacity and Error Rate

Channel capacity: $C = R_s \cdot \log_2(M)$ (bits/s).

Aggregate: $C_\text{tot} = N_\text{beams}\, N_\lambda\, N_\text{pol}\, R_s \log_2(M)$.

Target BER: $10^{-6} \sim 10^{-9}$ (with light FEC); SNR required for PAM-4 typically ~14–18 dB depending on receiver. (Guideline values; measure in-silico and on-bench.)
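Plugging the concept's numbers into these formulas gives a feel for the scale. A minimal sketch: 10 beams, 32 λ, 50 Gbaud and PAM-4 come from this article, while a single polarization (N_pol = 1) is an assumption. The result is a raw line rate before FEC overhead.

```python
import math

def channel_capacity(symbol_rate_baud: float, levels: int) -> float:
    """C = R_s * log2(M), in bits/s, for one wavelength channel."""
    return symbol_rate_baud * math.log2(levels)

def aggregate_capacity(n_beams: int, n_lambda: int, n_pol: int,
                       symbol_rate_baud: float, levels: int) -> float:
    """C_tot = N_beams * N_lambda * N_pol * R_s * log2(M), in bits/s."""
    return n_beams * n_lambda * n_pol * channel_capacity(symbol_rate_baud, levels)

per_channel = channel_capacity(50e9, 4)            # PAM-4 at 50 Gbaud
total = aggregate_capacity(10, 32, 1, 50e9, 4)     # N_pol = 1 is assumed
print(per_channel / 1e9, total / 1e12)  # 100.0 32.0
```

So each wavelength carries 100 Gb/s and the full 10 × 32 configuration would carry 32 Tb/s of raw data, if every channel reaches the target SNR.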

Power Budget (Example)

Laser per λ: 10 mW in the waveguide.

Typical losses: coupling 1 dB + propagation 1–3 dB + mesh 2–6 dB + WDM filters 1–2 dB. Total target 5–10 dB → 1–3 mW at PD, sufficient for >50 Gbaud with modern TIAs. Nature
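The budget is easiest to follow in dB. The sketch below converts the 10 mW launch power to dBm, subtracts the itemized losses, and converts back; the function names are hypothetical and the loss values are the ones listed above.

```python
import math

def mw_to_dbm(power_mw: float) -> float:
    return 10 * math.log10(power_mw)

def dbm_to_mw(power_dbm: float) -> float:
    return 10 ** (power_dbm / 10)

def power_at_detector(laser_mw: float, losses_db) -> float:
    """Power reaching the photodetector after a chain of losses (in dB)."""
    return dbm_to_mw(mw_to_dbm(laser_mw) - sum(losses_db))

# coupling, propagation, mesh, WDM filters
best_case = power_at_detector(10, [1, 1, 2, 1])    # 5 dB total
worst_case = power_at_detector(10, [1, 3, 6, 2])   # 12 dB total
print(round(best_case, 2), round(worst_case, 2))  # 3.16 0.63
```

Note that the upper ends of the itemized ranges add up to 12 dB, which lands below 1 mW at the detector, so staying inside the 5–10 dB target means not every component can sit at its worst value.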

Energy per Operation

TFLN modulators: reported ~0.2 pJ/bit (lab) to few pJ/bit in practice.

Recent ONN prototype: ~1.2 pJ/op (65.5 TOPS/78 W).

Realistic MVP system goal: 1–10 pJ/MAC initially, optimized later. Optica
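The pJ/op figure follows directly from power divided by throughput. A minimal sketch using the 65.5 TOPS and 78 W quoted above (the function name is hypothetical):

```python
def energy_per_op_pj(power_w: float, tera_ops_per_s: float) -> float:
    """Energy per operation in picojoules: watts / (ops per second)."""
    joules_per_op = power_w / (tera_ops_per_s * 1e12)
    return joules_per_op * 1e12

prototype = energy_per_op_pj(78, 65.5)
print(round(prototype, 2))  # 1.19
```

Read the other way, a 1–10 pJ/MAC target means that each watt of system power buys roughly 0.1 to 1 tera-MAC per second.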

Phase A — Bench (0–1 layers)

PIC 8×8 or 16×16 MZI (SiN + TFLN), 8–16 λ @ 25–50 Gbaud, PAM-4.

Auto-calibration firmware (phase loop + PD “tap” readout).

Goal: demonstrate >1 Tb/s linear throughput and BER <10⁻⁶ with light FEC.

Phase B — ONN 64×64

Package 10 beams × 32 λ (as per concept).

Hybrid pipeline with off-chip nonlinearity; measure end-to-end pJ/MAC.

Goal: inference on small models (compact CNNs/transformers) with near-electronic accuracy. Reuters, physicsworld.com

Phase C — Interconnection/Scaling

Explore co-packaged optics for clusters and multi-chip meshes (CPO 1.6 Tb/s per port).

Goal: scale bandwidth and reduce backplane energy/bit.

Choose N and WDM (e.g., N=64, Nλ=32, Rs=50 Gbaud, PAM-4).

Train the model normally (PyTorch), then quantize to ABFP-16/FP8. physicsworld.com

Extract matrices of each linear layer; factor via Clements → $\{\theta_i, \phi_i\}$ of the MZIs. spj.science.org

Schedule phase programming (order minimizing drift), heat to stable setpoints.

Inject input vectors as intensity envelopes into chosen λ (digital mapper → DAC → modulator).

Read PD/ADC, apply nonlinearity digitally, repeat for next layer.

Continuous calibration: inject pilots on reserved λ; adjust θ, ϕ with gradient estimated from measurement.
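The steps above amount to a hybrid loop: a linear layer on the photonic side, then a digital nonlinearity, repeated per layer. The sketch below imitates that loop in plain Python. It is a simplified stand-in, not the real pipeline: a matrix-vector product replaces the programmed MZI mesh (no Clements factorization is performed), and a uniform 8-bit quantizer replaces ABFP-16/FP8. All names are hypothetical.

```python
def quantize(values, bits: int = 8):
    """Uniform symmetric quantization of one weight row
    (a simple stand-in for ABFP-16/FP8)."""
    scale = max(abs(v) for v in values) or 1.0
    levels = 2 ** (bits - 1) - 1
    return [round(v / scale * levels) / levels * scale for v in values]

def linear_layer(weights, x):
    """Stand-in for the optical mesh: a plain matrix-vector product."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in weights]

def relu(x):
    """Nonlinearity applied digitally after photodetection and ADC."""
    return [max(0.0, v) for v in x]

def run_network(layers, x, bits: int = 8):
    """Layer by layer: quantize weights, multiply, apply nonlinearity."""
    for weights in layers:
        quantized = [quantize(row, bits) for row in weights]
        x = relu(linear_layer(quantized, x))
    return x

layers = [
    [[1.0, -1.0], [0.5, 0.5]],   # first linear layer (2x2)
    [[1.0, 1.0]],                # second linear layer (1x2)
]
print(run_network(layers, [2.0, 1.0]))
```

On hardware, `linear_layer` is where the light does the work; everything else in the loop stays in the electronic control path.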

That is the idea based on what already exists, but what we are building is very different. We will present the tests and results, along with practical demonstrations, at live in-person events.

Practical use

Our light processor model could be used to calculate and simulate things like orbits, gravitational fields and mass, and velocity, to research theoretical particles such as gravitons (creating and proving technologies), and to integrate with an organic processor. With AI and thought simulation guiding the simulations, a usable and applicable model can be built around actual needs rather than merely speculative ones. The organic processor and organic artificial intelligence can be used to make rational decisions and to simulate mass behavior (masses of people, communities, human behavior), such as finding the best route or outcome for questions of human behavior or human need.

Production for aerospace and military use would be more expensive and would require very large capacities. However, the cost per processor can be reduced so that processors can be used commercially in large quantities and in diverse models for specific areas, such as everyday data processing, while keeping high performance in information volume and speed.

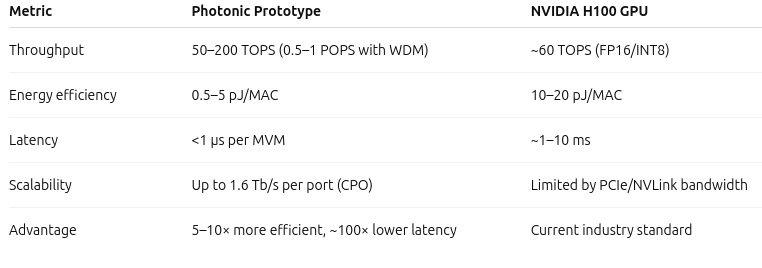

Examples of the tests we ran with a standard architecture model, compared with one existing on the market. The expected capacity of our architecture is much greater than that.

⚠️ Disclaimer: SpaceJX's content is for informational purposes only and does not constitute an offer to sell or a solicitation to buy any securities. Forward-looking statements are subject to risks and uncertainties. None of the technologies described have regulatory approval.